When you can’t trust your own eyes and ears to detect deepfakes, who can you trust?

Perhaps, a machine.

University of Florida researcher Damon Woodard is using artificial intelligence methods to develop algorithms that can detect deepfakes: images, text, video and audio that purport to be real but aren’t. These algorithms, Woodard says, are better at detecting deepfakes than humans.

“If you’ve ever played poker, everyone has a tell,” says Woodard, an associate professor in the Department of Electrical and Computer Engineering, who studies biometrics, artificial intelligence, applied machine learning, computer vision and natural language processing.

“The same is true when it comes to deepfakes. There are things I can tell a computer to look for in an image that will tell you right away ‘this is fake.’”

The issue is critical. Deepfakes are a destructive social force that can crash financial markets, disrupt foreign relations and cause unrest and violence in cities. A video that appears to show a member of Congress or even the president saying something outrageous and untrue, for example, can destabilize foreign and domestic affairs. The potential harm is great, Woodard says.

Some fakes seem innocuous, like photoshopping the model on the cover of Cosmo or adding Princess Leia to a Star Wars sequel. And for now, imperfections in teeth, hair and accessories provide clues for eagle-eyed skeptics. But images can spread quickly, and it is difficult to get the mind to unsee, and unbelieve, a deepfake, making machines an important ally in the fight against them.

“You could run thousands of these images through and quickly decide ‘this is real, this is not,’” Woodard says. “The training takes time, but the detection is almost instantaneous.”

Deepfakes are just one element of Woodard’s work at the Florida Institute for Cybersecurity Research. Woodard also uses machine learning to analyze online text to establish authorship. Machine learning is also central to his research on detecting hardware trojans inserted onto circuit boards, a national security concern because the boards are largely manufactured outside the US.

When it comes to online text, the way a person uses language can reveal their identity. Online predators who use chat rooms and other digital communities leave a trail of textual communication. Woodard and his team have come up with ways to identify people solely by the way they compose those online messages.

“You can read a book by your favorite author, and I don’t need to tell you the author, you just know by reading the book; you know the person’s writing style,” Woodard says. “This is kind of the same thing.”

Woodard says the work complements offline physiological means of establishing identity, such as biometric tools like fingerprints, irises and facial scans.

“This gives us the ability to identify that person in cyberspace,” Woodard says.
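To make the idea of a textual "writing style" concrete, here is a minimal sketch of one common stylometric approach: compare character n-gram frequencies between an unknown message and known writing samples. It is an illustration only, not the method Woodard's team uses, which the article does not describe. The function names, the trigram choice and the sample texts are all assumptions made for the example.

```python
from collections import Counter
from math import sqrt


def char_ngram_profile(text, n=3):
    """Count overlapping character n-grams in lowercased, whitespace-normalized text."""
    normalized = " ".join(text.lower().split())
    return Counter(normalized[i:i + n] for i in range(len(normalized) - n + 1))


def cosine_similarity(a, b):
    """Cosine similarity between two n-gram count profiles (0.0 if either is empty)."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm_a = sqrt(sum(c * c for c in a.values()))
    norm_b = sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_likely_author(unknown_text, known_samples):
    """Return the candidate whose known writing is most similar to the unknown text.

    known_samples maps a (hypothetical) author label to a sample of that author's text.
    """
    unknown = char_ngram_profile(unknown_text)
    return max(
        known_samples,
        key=lambda author: cosine_similarity(
            unknown, char_ngram_profile(known_samples[author])
        ),
    )


# Invented sample data, for illustration only.
samples = {
    "author_a": "hey hey hey whats up lol lol",
    "author_b": "Good afternoon. I trust this message finds you well.",
}
guess = most_likely_author("lol hey whats up", samples)
```

A real system would need far more text per author and a trained model rather than a single similarity score, but the principle is the same: habits of spelling, punctuation and phrasing leave a measurable signature.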

Another national security concern is hardware trojans. Manufacturing of computing hardware largely occurs outside the US, making it susceptible to manipulation by foreign actors. Image analysis, computer vision and machine learning can help in three ways:

- Detecting when something has been added to hardware that shouldn’t have been.

- Detecting when something is missing that should be present.

- Detecting a modification of something that should not have been modified.

With integrated circuits, Woodard says a computer can use an image to extract important features in all three cases. Circuits can be represented as graphs, and machine learning and deep learning can be applied to do graph analysis, which determines whether a graph is correct or has been modified.
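The three cases above (something added, something missing, something modified) can be sketched as a comparison between a trusted reference graph and a graph extracted from the device under inspection. The sketch below is a simplified, deterministic stand-in, not the machine learning approach the article refers to. It assumes component identifiers can be matched between the two graphs, and the `CircuitGraph` class and component names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CircuitGraph:
    """Hypothetical circuit model: typed components plus undirected connections."""
    components: dict = field(default_factory=dict)   # component id -> component type
    connections: set = field(default_factory=set)    # set of frozenset({id_a, id_b})

    def connect(self, a, b):
        self.connections.add(frozenset((a, b)))


def neighbors(graph, cid):
    """Return the ids of all components directly connected to cid."""
    return {
        other
        for edge in graph.connections
        if cid in edge
        for other in edge
        if other != cid
    }


def compare_to_reference(reference, observed):
    """Report components that were added, are missing, or were modified.

    A shared component counts as modified if its type or its wiring differs.
    """
    ref_ids, obs_ids = set(reference.components), set(observed.components)
    modified = sorted(
        cid
        for cid in ref_ids & obs_ids
        if reference.components[cid] != observed.components[cid]
        or neighbors(reference, cid) != neighbors(observed, cid)
    )
    return {
        "added": sorted(obs_ids - ref_ids),
        "missing": sorted(ref_ids - obs_ids),
        "modified": modified,
    }


# Invented example: a capacitor was removed and an unknown part wired to the chip.
reference = CircuitGraph({"R1": "resistor", "C1": "capacitor", "U1": "ic"})
reference.connect("R1", "U1")
reference.connect("C1", "U1")

observed = CircuitGraph({"R1": "resistor", "U1": "ic", "X9": "unknown"})
observed.connect("R1", "U1")
observed.connect("X9", "U1")

report = compare_to_reference(reference, observed)
# report == {"added": ["X9"], "missing": ["C1"], "modified": ["U1"]}
```

In practice the observed graph would come from image analysis and would not arrive with matching labels, which is part of why learned graph analysis is useful here.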

“You want to be sure everything you are looking for is here and what shouldn’t be there isn’t there,” Woodard says.

With printed circuit boards, an image from a regular optical camera can be used. Computer vision would then be able to tell how many resistors are on the board, how many capacitors, how many connections. The process would be automatic and fast.

And getting faster, with the help of NVIDIA computing power, Woodard says.

“These models could have billions of parameters,” Woodard says. “The research we’re conducting typically involves data sets that are at least a few terabytes nowadays. Before you can even evaluate a model to see if it’s good, it has to finish the training.”

Supercomputing speeds up research. Just a few years ago, a researcher would have had to choose a direction of inquiry to invest in, perhaps only realizing she needed to go in another direction after the first path had run its course.

With the supercomputing available via NVIDIA and HiPerGatorAI, researchers can explore multiple directions at once, discarding the models that don’t work, refining the models that do.

Pictures and Privacy

The camera on your smartphone actually isn’t all that smart. When you point it, everything in the viewfinder ends up in the photo.

A truly smart camera could edit the scene before it takes the photo, says Sanjeev Koppal, a computer vision researcher in the Department of Electrical and Computer Engineering. Koppal and his team in the Florida Optics and Computational Sensor Laboratory are working on building such cameras, which can be selective about visual attention.

“We want to be intelligent about how we capture data,” Koppal says. “That’s actually how our eyes work. Our eyes move a lot. They’re sort of scanning all the time. And when they do that, they give preferential treatment to some parts of the world around them.”

“I’m interested in building cameras that can be intelligently attentive to the stuff around them.”

The idea of attention is really old in AI, Koppal says. Attention — knowing what to pay attention to — is a sign of intelligence. For animals, that might be food or predators. For a camera, it’s a little more complicated.

A camera that can select what to capture in a scene requires very fast sensors and optics, so that the camera can process the visual cues in a scene quickly. But it also requires algorithms that live in the camera that can change quickly depending on what the camera sees.

This AI-inside-the-camera approach can be important for safety and privacy. One camera Koppal’s lab built can put more emphasis on sampling for depth for objects of interest. Such an approach is useful for developing more advanced LiDAR, or light detection and ranging, a remote sensing method that relies on laser pulses and is often used for autonomous vehicles.

The advantage of an adaptive, intelligent LiDAR is that it can pay more attention to pedestrians or other cars rather than the pavement in front of it or the buildings that line a roadway. In the lab-built version, fast mirrors change the focus of the camera very quickly, such that it might more easily capture a fast-moving object — a child running into the street, for example.

The training data for street scenes is plentiful and diverse, making it possible to create algorithms that select which features can be dropped out of a street scene while allowing an autonomous vehicle to navigate safely based on the features that need to remain in view. The test, Koppal says, is whether the algorithm — given a new image — knows what to pay attention to.

The camera uses tiny mirrors that move so fast it’s like having two cameras trained on a scene. The goal, Koppal says, is to develop a camera that can process a complicated scene, such as 10 pedestrians walking at a distance. The tiny mirrors can flip between the distant images, making sense of the scene better than today’s LiDAR.

“The question becomes, where do you point this?” Koppal says. “That’s where intelligent control and the AI come in.”

The camera would need to be taught to pay more attention to the group of pedestrians, for example, than to the trees lining the sidewalk.
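One way to picture "paying more attention" is as a budgeting problem: a sensor can take only so many depth samples per scan, so it spends more of them where it matters. The sketch below splits a fixed sample budget across scene regions in proportion to importance weights. It illustrates the idea only and is not the control scheme used in Koppal's lab. The region names and weights are invented, and in a real system the weights would come from a learned model rather than being set by hand.

```python
def allocate_samples(importance, budget):
    """Split a fixed number of depth samples across regions by importance.

    importance maps a region name to a non-negative weight. Shares are rounded
    down, and any leftover samples go to the regions with the largest remainders
    so that the total always equals the budget.
    """
    total = sum(importance.values())
    if total <= 0 or budget <= 0:
        return {region: 0 for region in importance}

    exact = {region: budget * weight / total for region, weight in importance.items()}
    allocation = {region: int(share) for region, share in exact.items()}

    leftover = budget - sum(allocation.values())
    by_remainder = sorted(
        importance, key=lambda region: exact[region] - allocation[region], reverse=True
    )
    for region in by_remainder[:leftover]:
        allocation[region] += 1
    return allocation


# Hypothetical weights: pedestrians matter most, trees least.
plan = allocate_samples({"pedestrians": 6.0, "cars": 3.0, "trees": 1.0}, budget=1000)
# plan == {"pedestrians": 600, "cars": 300, "trees": 100}
```

The hard part, as Koppal notes, is deciding where to point; the arithmetic of dividing up the samples is the easy step that follows.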

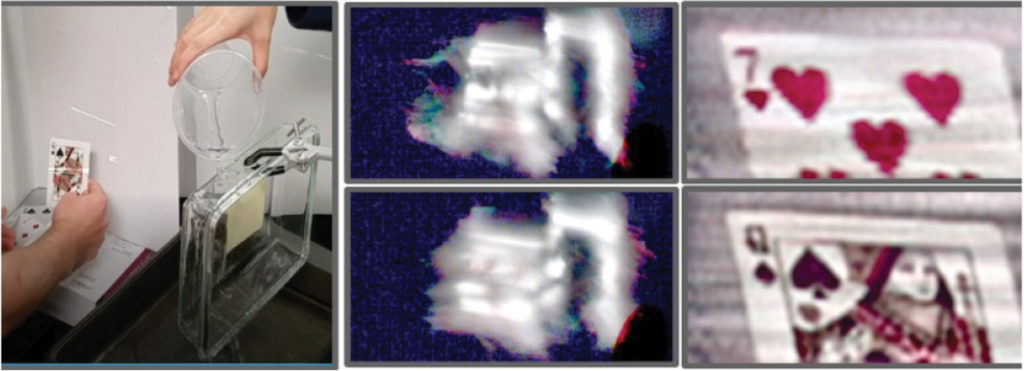

Another camera in Koppal’s lab can see better than humans, even around corners, and detect images not visible to the human eye. The camera uses a laser that bounces off of objects hidden from human view and travels back to the camera, where the light is analyzed to determine what is hidden. The camera can use the same technique to sharpen images that would be blurry to the human eye.

“Imagine it’s raining on your windshield, and you can’t see anything,” Koppal says. “This camera can still see. It could help you. It gives you one more chance to see the same scene.”

While much of the focus is on cameras that can do more than the human eye, equally important work is being done on cameras that perceive less than the human eye, Koppal says. Prototypes of these cameras create privacy inside the camera while the image is being formed.

“It’s not a small point,” Koppal says. “If you capture an image, the data exists somewhere and now you have to remove the faces if you want to protect privacy. I say, don’t capture it at all, and the images you end up with are already private.”

In some situations, there is a need to monitor people without identifying them, such as on an assembly line, where a company may want to monitor employees’ safety without identifying individuals. Another example of the need to see some things but not others is the humble household robot, such as a Roomba vacuum cleaner.

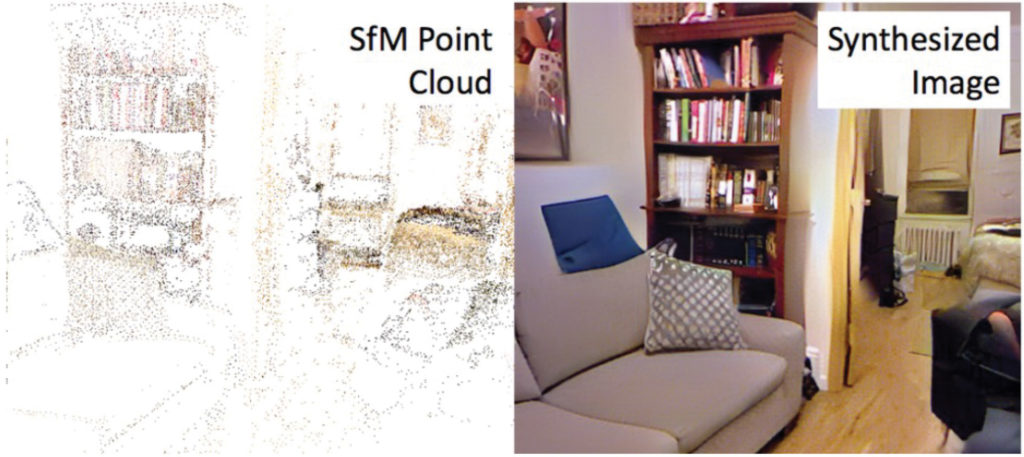

Koppal says any robot in the home could be picking up sensitive information along with household dirt. A robot navigates a home by a method called SLAM, or simultaneous localization and mapping, which uses blue-and-red point cloud files that are low resolution and don’t look like much. The images are usually deleted after a short period.

“It’s private, just this blue and red thing. But we showed that you can invert this to get back the image and see somebody’s house. You can see what kind of products they use, and you could imagine if there was paper and enough of the dots, you could read their taxes,” Koppal says.

“Your robot reading your taxes is unacceptable.”

Sanjeev Koppal’s lab developed a lightweight projector and algorithm that can see objects obscured around a corner or by opacity, such as rainwater on a windshield. At right, a projector scans the scene as a camera looks from the side through water-covered glass. The system allows the camera to pick up the images on the playing cards.

Fixing the issue of the nosy robot would require a camera Koppal’s lab has designed that can learn how to be good at some tasks — navigating your living room — but bad at others — like reading your taxes.

“This is pure AI,” Koppal says.

Such a camera can preserve privacy while fostering security. For instance, if a line of people is going through a metal detector at a courthouse, the camera can avoid capturing their faces. But if the camera detects a gun or knife, that signals the camera to capture the face and show the identity of the person carrying the weapon.

“It’s easy to fool a human. You just put a black box over a face. But it’s not easy to fool a machine, especially AI machines,” Koppal says. “Data that may not look like anything to us can be read by a machine.”

Privacy, Koppal says, is something people often don’t value until they lose it. In what he calls the “camera culture” of his lab, the researchers like to think about the future of camera technology.

“In the future, there’ll be trillions of very small connected cameras,” Koppal says. “So, I think it’s important, before those cameras are built, to think about how they function in the world. This is the time.”

Source:

Damon Woodard

Associate Professor of Electrical and Computer Engineering

dwoodard@ufl.edu

Sanjeev Koppal

Assistant Professor of Electrical and Computer Engineering

sjkoppal@ece.ufl.edu
